This is Tanami from the CG team.

Producing a short film used to require expensive equipment and specialized skills. Thanks to the evolution of AI services, even I, who had not edited video since my student days, can now create one easily. In this post, I introduce the five AI services I used and the process I followed to create my short film.

Here is the blog post about creating a short film for an event:

Create an event promotion video in 3 hours: Generating videos from images using Runway Gen-2, an AI video generation tool (written in Japanese)

Here is the video I created this time. I developed the concept of the short film myself, drawing on the brand concept of "accelerando.Ai".

The concept of this "accelerando.Ai" short film is "beginning."

I focused on portraying the beginning of a fashion brand that embodies the future, evolution, sophistication, and innovation.

AI services used in this project:

1. Music recommendation AI agent: Maison AI - ChatGPT

2. Music generation AI: Soundraw

3. Image generation AI: Midjourney

4. Image generation AI (using the Generative Fill feature): Photoshop (Beta)*

5. Video generation AI: Runway Gen-2 (this time used for image-to-video generation)

6. Video editing: Runway

*After this post was published, the Generative Fill feature was added to the standard version of Photoshop and is now available for commercial use. If you'd like to try it out, please use that version.

1. Music recommendation AI agent → Maison AI - ChatGPT

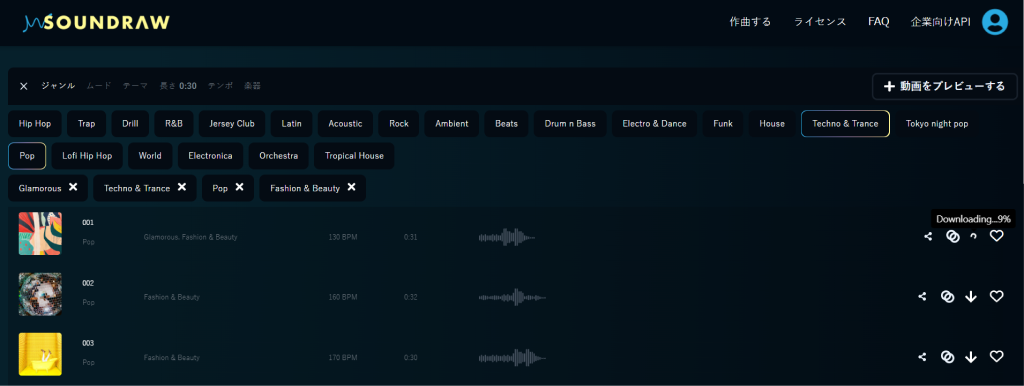

I find it easier to edit video once the music is decided, so I started by creating a track. To do that, I built an AI agent for sound creation on Maison AI and had it suggest which options to select when creating music on Soundraw.

Although Soundraw allows music to be generated by simply selecting a genre and mood, I'm not very knowledgeable about music and was unsure which genre to choose.

I asked the agent to recommend music for a "30-second video of a futuristic, speedy, mysterious, cool, and exciting fashion brand".

The AI agent on Maison AI remembers all the options you can select on Soundraw and makes its suggestions from among them.

2. Music generation AI → Soundraw

I selected the genre suggested by the AI agent and listened to the generated tracks from the top of the list. I adjusted the volume of the track I liked and downloaded it. A free account cannot download tracks, but you can still try generating music. I personally found Soundraw's official YouTube tutorial very helpful, so check it out if you're interested.

3. Image generation AI → Midjourney

On Midjourney, I generated 16:9 images that matched the look of "accelerando.Ai". Having already used Runway Gen-2, I had a good idea of which kinds of images work well with it, so I aimed for compositions that would be compatible. "--ar 16:9" is the aspect ratio parameter for generating images on Midjourney.
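As a small illustration of the parameter, the sketch below appends "--ar 16:9" to a prompt string. The helper name `with_aspect_ratio` and the example prompt text are hypothetical and not taken from the project; the function only builds the text you would paste into Midjourney and does not call any Midjourney API.

```python
def with_aspect_ratio(prompt: str, width: int = 16, height: int = 9) -> str:
    """Append Midjourney's aspect ratio parameter (--ar W:H) to a prompt string."""
    if width <= 0 or height <= 0:
        raise ValueError("aspect ratio values must be positive integers")
    return f"{prompt.strip()} --ar {width}:{height}"


# Hypothetical prompt text, for illustration only.
print(with_aspect_ratio("futuristic fashion brand, abstract light trails"))
```

Keeping the parameter in one helper makes it harder to forget when generating many variations for the same video.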

For more about Midjourney, please refer to the OpenFashion blog.

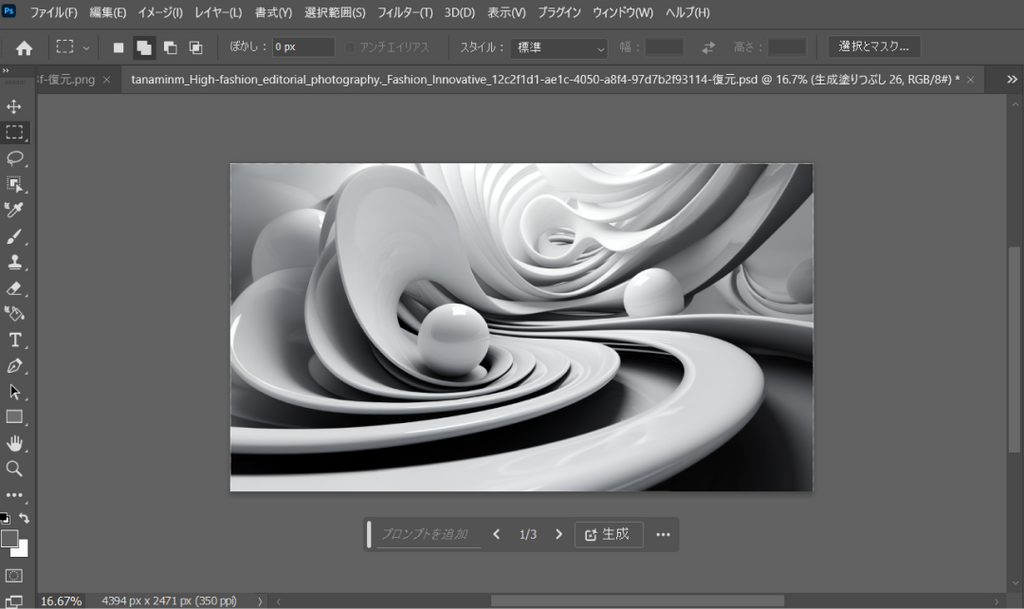

4. Image generation AI (using the Generative Fill feature) → Photoshop (Beta)

*After this post was published, the Generative Fill feature was added to the standard version of Photoshop. If you'd like to try it out, please use that version.

For images I liked that had not been generated in a 16:9 aspect ratio, I used Generative Fill in Photoshop (Beta) to extend them to 16:9.

I prepared a 1920 × 1080 px canvas, selected the blank areas on both sides of the image, and ran Generative Fill without a prompt.

After converting several images to 16:9 this way, I took them to Runway.
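To get a feel for how much blank area has to be filled, here is a minimal sketch of the arithmetic. It assumes the image is scaled to the canvas height and centered horizontally, which is my assumption about the layout rather than something stated above; the function name `side_margins` is hypothetical.

```python
def side_margins(width: int, height: int, canvas_w: int = 1920, canvas_h: int = 1080):
    """Scale an image to the canvas height and return (scaled_width, left, right) in px.

    Assumes the image is centered horizontally on the canvas.
    """
    scaled_w = round(width * canvas_h / height)
    if scaled_w > canvas_w:
        # Wider than 16:9: the image would need cropping, not filling.
        raise ValueError("image is wider than the canvas after scaling")
    blank = canvas_w - scaled_w
    left = blank // 2
    right = blank - left
    return scaled_w, left, right


print(side_margins(1024, 1024))  # (1080, 420, 420)
```

For a square image, each side needs a 420 px strip, so close to half of the final frame is generated by the fill.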

5. Video Generation AI → Runway Gen-2

Runway offers two types of video generation: Gen-1, which transforms an existing video using a text or image prompt, and Gen-2, which generates video from text, an image, or both. For this project, I used Gen-2 to generate video from images.

My basic approach is to prepare a 16:9 image and generate a video in Runway Gen-2 without entering a prompt. I then evaluate the generated videos and select the ones that work best for the short movie.

In Runway Gen-2, a single generation produces a 4-second video from one image. A recent update lets you extend the clip by repeating the generation, for up to 16 seconds in total (four 4-second generations).
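As a rough planning aid, the sketch below estimates how many clips a video of a given length needs. It uses the 4-second and 16-second figures above and ignores trimming and transitions; the function name `clips_needed` is hypothetical.

```python
import math

BASE_CLIP_SECONDS = 4   # length of one generation, as described above
MAX_CLIP_SECONDS = 16   # maximum length after extending, as described above


def clips_needed(target_seconds: float, clip_seconds: float = BASE_CLIP_SECONDS) -> int:
    """Return the minimum number of clips needed to cover target_seconds."""
    if target_seconds <= 0 or clip_seconds <= 0:
        raise ValueError("durations must be positive")
    return math.ceil(target_seconds / clip_seconds)


print(clips_needed(30))                    # 8 clips of 4 seconds
print(clips_needed(30, MAX_CLIP_SECONDS))  # 2 clips of 16 seconds
```

In practice you would generate more than this minimum, since only some of the generated clips are good enough to use.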

A new feature called MotionSlider has also been added. It lets you adjust the intensity of motion in the "Motion" section at the bottom left of the screen.

A higher value produces more pronounced motion. For this project, I set the value between 3 and 4 to keep the motion subtle. I haven't tested it thoroughly, but my impression was that slower motion reduces distortion.

There are tutorials on the official Runway Academy and on Instagram, so please check them out.

*Note: After this post was published, a "Custom camera control" feature was added, allowing camera movements such as horizontal and vertical moves, zooming in and out, and rotation. New features are being added continually, and I'm looking forward to trying this one out.

From the dozens of images I tested for video generation, I've summarized the types of source images that I felt were most compatible, meaning the resulting video looks natural to a human viewer. In the table, ◎ means very good, 〇 means good, and △ means hit or miss. If you're planning to generate videos from images using Runway Gen-2, this might serve as a reference:

| Image Type | Compatibility |
| --- | --- |
| Abstract art images | ◎ |
| Scenic images | ◎ |
| Close-up of people (clearer is better) | ◎ |
| Upper body of people | 〇 |
| Full body of people | △ |

Abstract art images:

These were used in the final short movie. The videos generated from these images felt especially natural.

Scenic images:

These also produced videos that looked natural.

Close-up of people:

Clear close-up images of people, like the one at the end of the finished short movie, produced natural-looking videos regardless of the angle.

Upper body of people:

Honestly, this is something you won't know until you try. Roughly 60% of the time, the generated video looked natural. Front-facing images tend to work better. This time, when I generated from an image of a person facing diagonally to the side, the result drifted significantly from the original image, so I did not include it in the short movie.

The video from the second image looked natural enough, so I used it in the short movie.

Full body of people:

I often felt that the AI had difficulty distinguishing the front of a person from the back. In some cases a forward-facing figure rotated its face until it appeared to face backward, producing movement that looks unnatural to a human viewer.

One particular image changed so significantly when converted into a video that it wasn't included in the short movie.

Other unpredictable items:

The white short boots in the short movie produced a natural-looking video, but the long boots I had tried earlier fused together and moved in unexpected ways. Some aspects of how the AI interprets an image remain unpredictable until you test them.

The videos generated from these six types of images have been included as references.

6. Video editing → Runway

I used the video editing function available in Runway. I wanted to try "AI BeatSnapping", which makes it easier to align videos with music.

I mostly edited by hand, but Runway also offers "AI Magic Tools" such as "GreenScreen", "Inpainting", and "MotionTracking".

One tip: for AI-generated clips that start to look off partway through, slow the clip down and cut out the unnatural section.

If you're editing for the first time, "Clipchamp" might be easier to use. It has many ready-made motions, effects, and templates.

Conclusion

- You can create a short movie in a few hours using AI services.

- Some types of images are better suited to video generation than others.

- Video generation is largely a cycle of generating clips and then evaluating the results.

- Videos generated from abstract images are quite usable as motion graphics.

- The speed of feature additions is impressive, and there's much to look forward to!

Final Thoughts

Thank you for reading to the end.

Editing a video after such a long time was fun.

In my student days, we split into three teams, each working on the video little by little after school:

- The filming team (edited with Adobe Premiere)

- The motion graphics team (edited with Adobe After Effects)

- The music production team (I've forgotten the specifics)

I was amazed that what took a team back then could now be done by one person in a few hours.

Videos generated from abstract images seem especially easy to use as motion graphics. As for music production, it is a field that normally requires knowledge, yet I was able to produce something suitable starting from nothing more than an impression of the mood I wanted.

With the rapid advancement of AI services and technology, what one person can achieve has expanded significantly. However, having a sense of what feels "right" seems crucial to make the most of these services.

After creating the short movie, I strangely felt like watching more films, listening to more music, and reading more magazines.

While some might wonder, "Do you really need to use five AI services?", most of these services offer free trials. So, I encourage you to give them a try.
